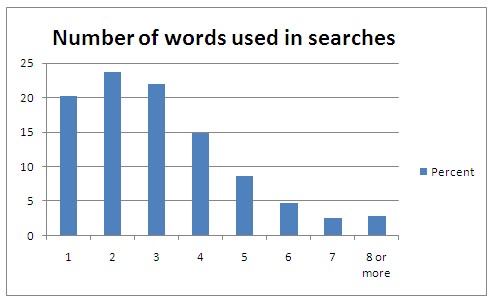

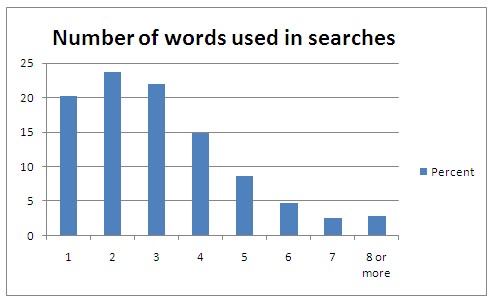

In over 80% of all search queries, fewer than five words are used.

According to the Internet monitoring company Hitwise, the distribution of the number of words used in search queries looked like this as of January 2009. Importantly, the statistics cover searches made, not people doing searches. Still, in over 80% of searches, fewer than five words are used in the query, and the most common query length is two words.

Now, we all make a lot of searches, and in some cases we have learnt that typing one or two specific words will give us the site we are looking for on the first page of search hits. Also, a lot of people have learnt that putting a single word XYZ directly in the address field of the browser will take them to http://www.XYZ.com. Doing the same thing in Google Chrome will deliver a search on that word. People are a combination of lazy, practical, and smart: they quickly learn what works and repeat it. When they search for something for real, the more word-rich queries come into use.

For anyone looking for something specific on the web, the chances of finding it increase if you have the knowledge to utilize the full power of the search engines. Think of it as shifting from first gear of search engine usage to second, third, fourth and fifth.

There are two dimensions to this:

1) Make sure you use the most appropriate search engine for the information you need to find. This is covered in more detail in a separate article.

2) Make sure you know how to tell the search engine exactly what you are looking for, instead of throwing in a bunch of keywords in random order. This is all about making use of search operators and special characters, which allow you to specify far more complex conditions than "I want to see pages that contain these words".

Searching more effectively with Google

There is a reason why Google is the dominant search engine: they index more, they index faster, and they are good at understanding which results people are interested in. In fact, Google's ability to index web content, combined with the powerful search operators specific to Google, has given birth to Google hacking. Google hacking has nothing to do with breaching Google's security. It is about using advanced Google searches in the research and reconnaissance phase of a network penetration attempt, in order to a) spot targets or b) find possible points of attack against a target.

Beyond the hunt for exploit opportunities, knowing how to push the right buttons of the Google search engine will of course benefit any search for information.

The list below covers what you need to know to make Google do a better job for you when searching. Roughly, these search operators fall into three groups:

1) those that say what to search for, i.e. what words and numbers to match,

2) those that say where to search, i.e. operators that limit the scope of the search or specify where the match should be, and

3) specialized information lookup operators, which make Google return results of a certain kind only.

Operators that say WHAT to search for

| 1) “What” operators | Result / Effect / Meaning |
|---|---|
| secret information | Will find content that contains each of the words anywhere in the text, but not necessarily side by side |
| “secret information” | Will match the exact phrase and word order |
| ~secret | Includes synonyms, alternative spellings and words with adjacent meaning |
| secret information OR intelligence | Will find content that contains the word secret plus at least one of the words information and intelligence |
| intelligence -information | Will find content that contains the word intelligence but not the word information |
| intelligence +secret | Will find content that contains both intelligence and secret, with secret as a required term |
| intelligence-community | Will find content where the two words appear separately, written as one word, or hyphenated |
| “central * agency” | The * character serves as a wildcard for one or more words. Note! When the * character is used between two numbers in a search with no letters, it functions as a multiplication operator and returns the product of the two numbers. |
| “US” “gov” | Google automatically includes synonyms and full-word versions of abbreviations. Putting each term in quotes ensures that the search is made for exactly those terms. |
| “coup d’etat” 1945..1969 | Will find pages that contain the phrase “coup d’etat” and any number in the range 1945-1969 |
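
To make the "what" operators concrete, here is a minimal Python sketch that assembles query strings from them. The helper names (phrase, any_of, exclude, number_range, build_query) are hypothetical and exist only for illustration; the code just builds the text you would type into the search box and does not contact Google.

```python
# Hypothetical helpers for composing the "what" operators into a query string.


def phrase(text: str) -> str:
    """Wrap text in double quotes so it is matched as an exact phrase."""
    return f'"{text}"'


def any_of(*terms: str) -> str:
    """Join terms with OR so that at least one of them must match."""
    return " OR ".join(terms)


def exclude(term: str) -> str:
    """Prefix a term with - so pages containing it are left out."""
    return f"-{term}"


def number_range(low: int, high: int) -> str:
    """Express a numeric range with the .. syntax, e.g. 1945..1969."""
    if low > high:
        raise ValueError("low must not exceed high")
    return f"{low}..{high}"


def build_query(*parts: str) -> str:
    """Combine query parts with spaces, skipping empty ones."""
    return " ".join(part for part in parts if part)


if __name__ == "__main__":
    print(build_query(phrase("coup d'etat"), number_range(1945, 1969)))
    print(build_query("secret", any_of("information", "intelligence")))
    print(build_query("intelligence", exclude("information")))
    print(build_query(phrase("central * agency")))
```

Keeping each operator in its own small function makes it easy to combine fragments, such as partial names and approximate date ranges, without getting quotes or spacing wrong.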

Operators that say WHERE to find a match between search term and content

| 2) “Where” operators | Result / Effect / Meaning |
|---|---|
| define: | Will look for the search term in word list, dictionary and glossary type pages, e.g. define:secret |
| define | Alternative syntax for define:. Will look for the search term in word list, dictionary and glossary type pages, e.g. define secret |
| intelligence ~glossary | Will find the word intelligence on pages of a glossary, dictionary or encyclopedia type |
| site: | Will limit the search to the internet domain specified, which can be a top-level domain, a main domain, a subdomain and so on. Examples: site:mil, site:groups.google.com. Combine several with the OR operator between them: site:mil OR site:gov |
| inurl: | Will limit the search so that the term immediately following it must appear in the page URL. This example will show results where wiki is part of the URL and sigint appears anywhere on the page: inurl:wiki sigint |
| allinurl: | Very similar to inurl:, with the difference that all of the words specified must be found in the URL |
| intitle: | Will limit the search to the title of pages. Title in this context means the HTML title of the web document, which is what you see in the browser tab or at the top of the browser window |
| allintitle: | Very similar to intitle:, with the difference that all of the words specified must be found in the page title |
| inanchor: | Will limit the search to the anchor text of hyperlinks on pages. The anchor text is the text that the page creator turned into a link to some page. It may reveal something about what the page creator thinks about the linked page, for example “Useful information on security” |
| allinanchor: | Very similar to inanchor:, with the difference that all of the words specified must be found in the anchor text |
| intext: | Will limit the results to pages where the search term was found in the text of the page |
| allintext: | Very similar to intext:, with the difference that all of the words specified must be found in the text of the page |
| filetype: | Will limit the results to files with the file extension specified, e.g. filetype:pdf to get only PDF documents |
| ext: | Shorthand version of filetype: that gives exactly the same result |
| cache: | Will show Google's cached version of a web site if available, e.g. cache:cia.gov. Note! This cannot be combined with additional search terms or operators |
| related: | Will show pages that have something in common with, or are related to, the site you specify, e.g. related:cia.gov. Note! This cannot be combined with additional search terms or operators |
| link: | Will show pages that contain a link pointing to the URL you specify, e.g. link:www.cia.gov/library |
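
A similar sketch for the "where" operators, again in Python and again with hypothetical helper names (scoped_query, any_site, standalone_query). It places the operators in front of the free-text terms with no space after the colon, joins several site: restrictions with OR, and refuses to add extra terms to cache: and related:, following the notes in the table.

```python
from typing import Optional

# Operators that, per the table above, cannot be combined with other terms.
STANDALONE_OPERATORS = {"cache", "related"}


def scoped_query(terms: str = "", *, site: Optional[str] = None,
                 filetype: Optional[str] = None,
                 intitle: Optional[str] = None,
                 inurl: Optional[str] = None) -> str:
    """Put the "where" operators that are set in front of the free-text terms."""
    parts = []
    # Each operator takes its argument directly after the colon, with no space.
    for name, value in (("site", site), ("filetype", filetype),
                        ("intitle", intitle), ("inurl", inurl)):
        if value:
            parts.append(f"{name}:{value}")
    if terms:
        parts.append(terms)
    return " ".join(parts)


def any_site(*domains: str) -> str:
    """Combine several site: restrictions with OR, as in site:mil OR site:gov."""
    return " OR ".join(f"site:{domain}" for domain in domains)


def standalone_query(operator: str, target: str) -> str:
    """Build a cache: or related: query, which must stand on its own."""
    if operator not in STANDALONE_OPERATORS:
        raise ValueError(f"{operator}: is not a standalone operator")
    cleaned = target.strip()
    # A space would mean extra terms, which these operators do not accept.
    if not cleaned or " " in cleaned:
        raise ValueError(f"{operator}: takes a single URL and no extra terms")
    return f"{operator}:{cleaned}"


if __name__ == "__main__":
    print(scoped_query("interrogation", site="fbi.gov", filetype="pdf"))
    print(any_site("mil", "gov"))
    print(standalone_query("related", "cia.gov"))
```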

Special search operators – valid only on specific Google sites

| 3) Special operators | Result / Effect / Meaning |
|---|---|
| location: | news.google.com – presents news search results related to the location, e.g. location:kabul |
| source: | news.google.com – presents news search results from the source specified, e.g. source:times |
| author: | groups.google.com – presents posts written by the author specified, e.g. author:einstein |
| group: | groups.google.com – presents posts made in the group specified, e.g. group:publicintel |

When you are looking for information but have only a vague idea of what to search for, only part of a name, or only an approximate date range, an advanced query that combines several such bits and pieces using the OR operator, phrase quotes and the * wildcard lets you cover all bases in one single search.

Here are a few interesting examples that apply several of the operators listed above.

- PDF files published by the FBI that talk about interrogation, methods, and deception:

site:fbi.gov ext:pdf +interrogation +methods +deception

- Web pages under the .mil top-level domain where the page title contains the word “staff” and the page contains a link with the word “login”, excluding PDF files as well as Word documents:

site:mil intitle:staff inanchor:login -ext:pdf -ext:doc

- Excel files published with the word “internal” as part of the URL, with the phrase “internal use only” in the file:

inurl:internal ext:xls OR ext:xlsx “internal use only”
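
If you run queries like these often, it can help to generate the search link instead of retyping it. Below is a minimal Python sketch, assuming the commonly used https://www.google.com/search endpoint with the query in the q parameter (an assumption, not something this article documents). The standard library's urllib.parse.urlencode handles the escaping, which matters here: a literal + in a URL is read as a space, so terms like +interrogation have to be sent as %2B.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Assumed search endpoint; the query text goes in the "q" parameter.
SEARCH_ENDPOINT = "https://www.google.com/search"

# The three example queries from the list above, with straight quotes.
EXAMPLES = [
    "site:fbi.gov ext:pdf +interrogation +methods +deception",
    "site:mil intitle:staff inanchor:login -ext:pdf -ext:doc",
    'inurl:internal ext:xls OR ext:xlsx "internal use only"',
]


def search_url(query: str) -> str:
    """Return a search link with the query percent-encoded.

    urlencode uses quote_plus, so spaces become "+", while literal "+",
    ":" and '"' characters become %2B, %3A and %22.
    """
    return f"{SEARCH_ENDPOINT}?{urlencode({'q': query})}"


def query_from_url(url: str) -> str:
    """Decode the q parameter back into the original query text."""
    return parse_qs(urlparse(url).query)["q"][0]


if __name__ == "__main__":
    for query in EXAMPLES:
        url = search_url(query)
        # Round-trip check: decoding the link gives back the exact query.
        assert query_from_url(url) == query
        print(url)
```

The round-trip check is a cheap way to confirm that operators and quotes survive the encoding unchanged before you bookmark or share a link.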

Learn more about how to search with Google:

http://www.googleguide.com

http://www.google.com/support/websearch/bin/answer.py?hl=en&answer=136861&rd=1